TorchServe Canary Deployment

Deploying a TorchServe model on EKS using a canary strategy

eks-config.yaml

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

iam:
  withOIDC: true

metadata:
  name: basic-cluster
  region: ap-south-1
  version: "1.27"

managedNodeGroups:
  - name: ng-dedicated-1
    instanceType: t3a.xlarge
    desiredCapacity: 4
    spot: true
    labels:
      role: spot
    ssh:
      allow: true # will use ~/.ssh/id_rsa.pub as the default ssh key
    iam:
      withAddonPolicies:
        autoScaler: true
        awsLoadBalancerController: true
        certManager: true
        externalDNS: true
        ebs: true

Create the Cluster

eksctl create cluster -f eks-config.yaml

Install KServe with KNative and ISTIO

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.11.0/serving-crds.yaml

kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.11.0/serving-core.yaml

kubectl apply -l knative.dev/crd-install=true -f https://github.com/knative/net-istio/releases/download/knative-v1.11.0/istio.yaml

kubectl apply -f https://github.com/knative/net-istio/releases/download/knative-v1.11.0/istio.yaml

kubectl apply -f https://github.com/knative/net-istio/releases/download/knative-v1.11.0/net-istio.yaml

kubectl patch configmap/config-domain \
  --namespace knative-serving \
  --type merge \
  --patch '{"data":{"emlo.tsai":""}}'

kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.11.0/serving-hpa.yaml

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.13.2/cert-manager.yaml

Wait for the cert-manager pods to be ready

kubectl apply -f https://github.com/kserve/kserve/releases/download/v0.11.2/kserve.yaml

Wait for the KServe Controller Manager to be ready

kubectl apply -f https://github.com/kserve/kserve/releases/download/v0.11.2/kserve-runtimes.yaml

Create EBS Controller

eksctl create iamserviceaccount \
  --name ebs-csi-controller-sa \
  --namespace kube-system \
  --cluster basic-cluster \
  --role-name AmazonEKS_EBS_CSI_DriverRole \
  --role-only \
  --attach-policy-arn arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy \
  --approve \
  --region ap-south-1

eksctl create addon --name aws-ebs-csi-driver --cluster basic-cluster --service-account-role-arn arn:aws:iam::006547668672:role/AmazonEKS_EBS_CSI_DriverRole --region ap-south-1 --force

Create the StorageClass

sc.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: standard
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer

kubectl apply -f sc.yaml

Create S3 Service Account

s3.yaml

apiVersion: v1

kind: Secret

metadata:

name: s3creds

annotations:

serving.kserve.io/s3-endpoint: s3.ap-south-1.amazonaws.com # replace with your s3 endpoint e.g minio-service.kubeflow:9000

serving.kserve.io/s3-usehttps: "1" # by default 1, if testing with minio you can set to 0

serving.kserve.io/s3-region: "ap-south-1"

serving.kserve.io/s3-useanoncredential: "false" # omitting this is the same as false, if true will ignore provided credential and use anonymous credentials

type: Opaque

stringData: # use `stringData` for raw credential string or `data` for base64 encoded string

AWS_ACCESS_KEY_ID: AxxxxQxxxxxxxxY2xxx

AWS_SECRET_ACCESS_KEY: "C/dGcccuAxxxxxxxx25mxxxxxxx"

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: s3-read-only

secrets:

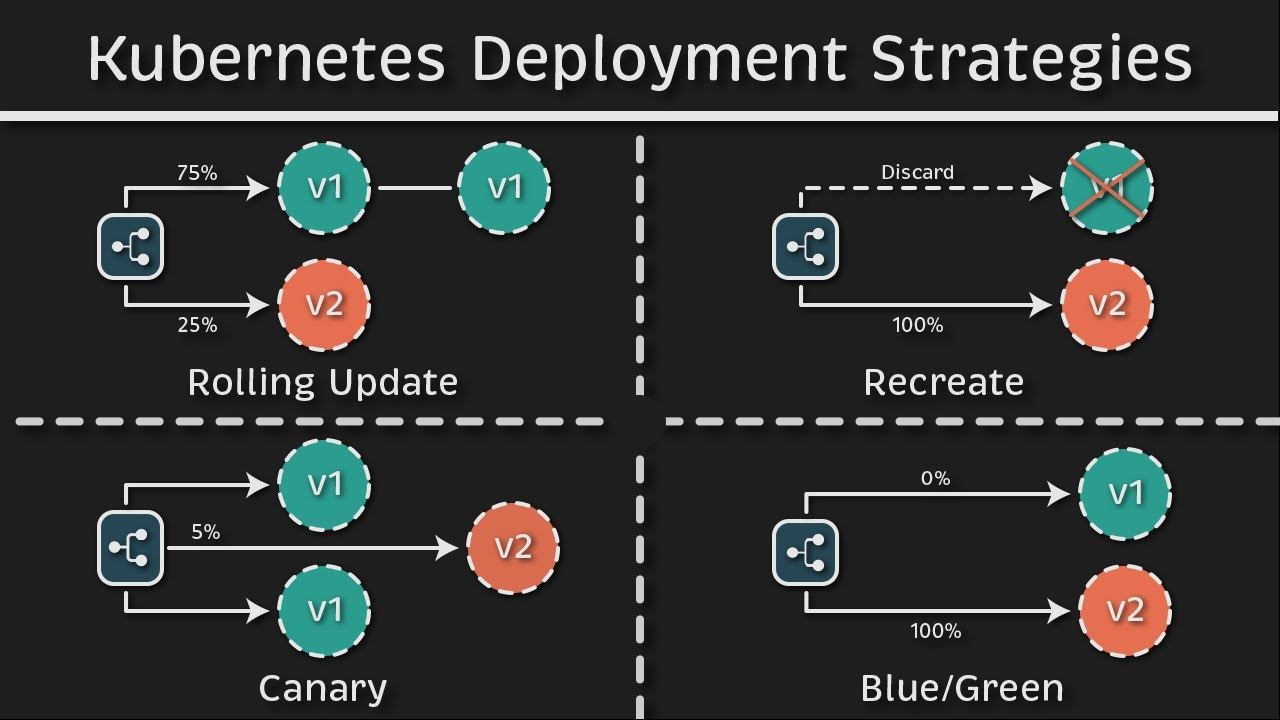

- name: s3credsKubernetes Deployment Strategies

Canary Deployment using NGINX: https://kubernetes.github.io/ingress-nginx/examples/canary/

Canary Deployment using ISTIO: https://istio.io/v1.10/blog/2017/0.1-canary/

KServe Canary Deployment

- Overview:

- Canary rollouts in Kubernetes are supported by KServe for inference services.

- Enables deploying a new version of an InferenceService to receive a percentage of traffic.

- Configurable Canary Rollout Strategy:

- KServe supports a customizable canary rollout strategy with multiple steps.

- Rollout strategy includes provisions for rollback to the previous revision if a step fails.

- Automatic Tracking:

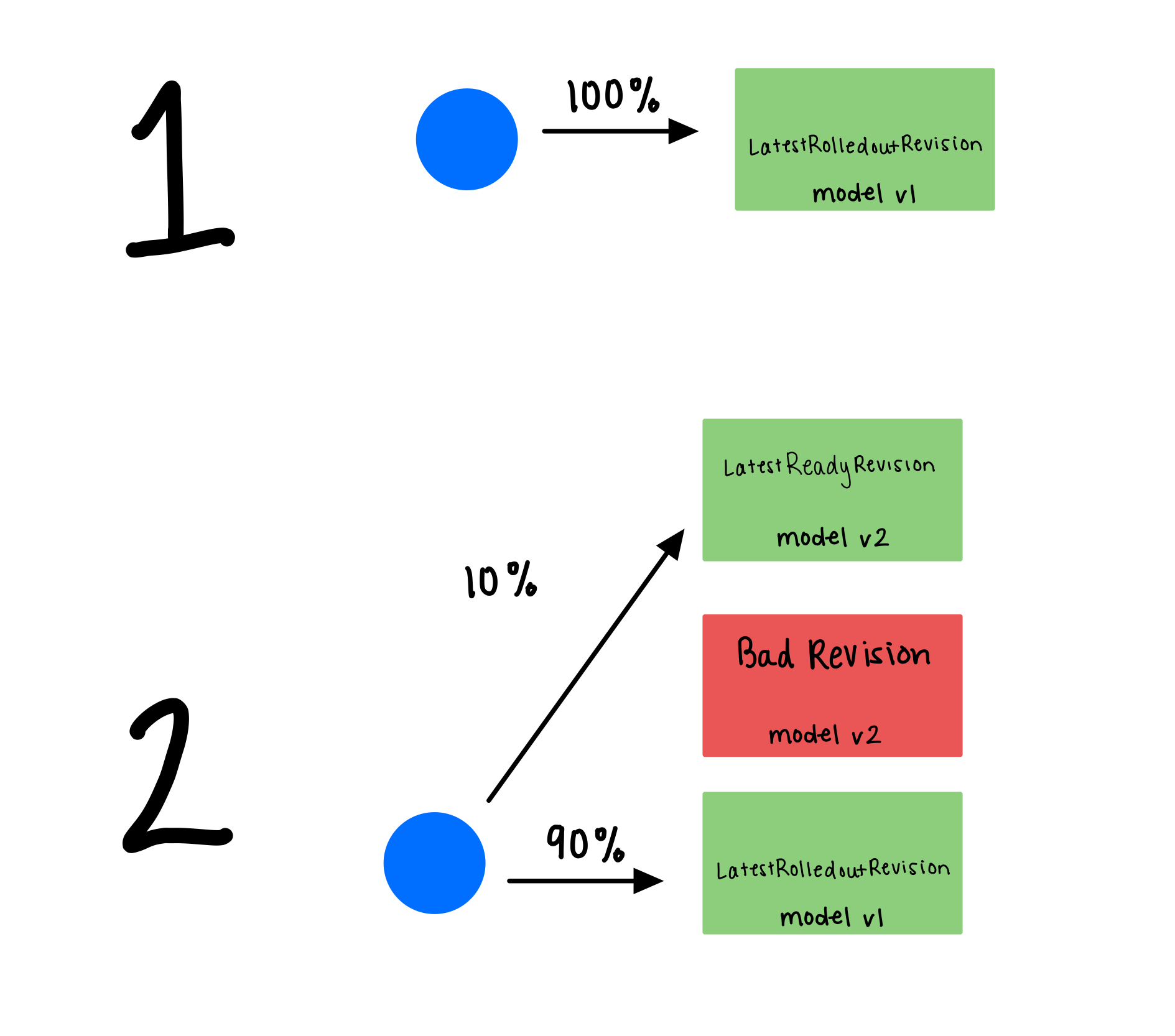

- KServe automatically tracks the last good revision rolled out with 100% traffic.

- The

canaryTrafficPercentfield in the component's spec sets the traffic percentage for the new revision.

- Traffic Splitting:

- During canary rollout, traffic is split between the last good revision and the new revision based on

canaryTrafficPercent. - The first revision deployed receives 100% traffic.

- In subsequent steps, if 10% traffic is configured for the new revision, 90% goes to the LatestRolledoutRevision.

- During canary rollout, traffic is split between the last good revision and the new revision based on

- Handling Unhealthy Revisions:

- If a revision is unhealthy or bad, traffic is not routed to it.

- In case of a rollback, 100% traffic is directed to the previous healthy revision, the PreviousRolledoutRevision.

- Rollout Steps:

- Step 1: Deploy the first revision, receives 100% traffic.

- Step 2: Deploy multiple revisions, route a configured percentage to the new revision.

- Step 3: Promote the LatestReadyRevision to the LatestRolledoutRevision, receiving 100% traffic and completing the rollout.

Note: Canary deployments allow controlled testing of new versions before full deployment, minimizing risks and ensuring a smooth transition.
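The traffic-splitting rule above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not KServe code; the function name `traffic_split` and its arguments are made up for this example.

```python
from typing import Optional


def traffic_split(canary_traffic_percent: Optional[int], has_previous_revision: bool) -> dict:
    """Expected traffic share (in percent) for the previous and the latest revision.

    Hypothetical helper that mirrors the rules described above:
    - the first revision gets 100% of the traffic
    - with canaryTrafficPercent set, the latest revision gets that share
      and the last rolled-out revision gets the rest
    - with canaryTrafficPercent removed, the latest revision is promoted to 100%
    """
    if canary_traffic_percent is None or not has_previous_revision:
        return {"previous": 0, "latest": 100}
    if not 0 <= canary_traffic_percent <= 100:
        raise ValueError("canaryTrafficPercent must be between 0 and 100")
    return {"previous": 100 - canary_traffic_percent, "latest": canary_traffic_percent}


print(traffic_split(None, has_previous_revision=False))  # first deployment
print(traffic_split(30, has_previous_revision=True))     # canary step
print(traffic_split(0, has_previous_revision=True))      # rollback
```

The three calls correspond to the first deployment, a 30% canary step, and a rollback with canaryTrafficPercent set to 0.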

vit-classifier.yaml

apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "imagenet-vit"
spec:
  predictor:
    serviceAccountName: s3-read-only
    model:
      modelFormat:
        name: pytorch
      storageUri: s3://tsai-emlo/kserve-ig-2/imagenet-vit/
      resources:
        limits:
          cpu: 2600m
          memory: 4Gi

This is our usual KServe deployment, nothing fancy

❯ kg isvc

NAME           URL                                     READY   PREV   LATEST   PREVROLLEDOUTREVISION   LATESTREADYREVISION            AGE
imagenet-vit   http://imagenet-vit.default.emlo.tsai   True           100                              imagenet-vit-predictor-00001   3m12s

Now let’s deploy the cat classifier model as the new canary candidate

We’ll modify the cat-classifier to advertise its model name as vit-classifier

aws s3 cp --recursive s3://tsai-emlo/kserve-ig-2/cat-classifier/ cat-classifier/

cat-classifier/config/config.properties

inference_address=http://0.0.0.0:8085
management_address=http://0.0.0.0:8085
metrics_address=http://0.0.0.0:8082
grpc_inference_port=7070
grpc_management_port=7071
enable_envvars_config=true
install_py_dep_per_model=true
load_models=all
max_response_size=655350000
model_store=/mnt/models/model-store
default_response_timeout=600
enable_metrics_api=true
metrics_format=prometheus
number_of_netty_threads=4
job_queue_size=10
model_snapshot={"name":"startup.cfg","modelCount":1,"models":{"vit-classifier":{"1.0":{"defaultVersion":true,"marName":"cat-classifier.mar","minWorkers":1,"maxWorkers":1,"batchSize":1,"maxBatchDelay":100,"responseTimeout":600}}}}

aws s3 cp cat-classifier/config/config.properties s3://tsai-emlo/kserve-ig-2/cat-classifier/config/config.properties

Convert this image to base64, for example with https://base64.guru/converter/encode/image

http://images.cocodataset.org/val2017/000000039769.jpg
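The encoding can also be done locally instead of through a web converter. The following is a minimal sketch that assumes the image has already been downloaded to disk; the helper names `build_payload` and `write_input_json` are made up for this example, and the payload shape follows the input.json used in this walkthrough.

```python
import base64
import json
from pathlib import Path


def build_payload(image_bytes: bytes) -> dict:
    """Wrap raw image bytes in the {"instances": [{"data": ...}]} request body."""
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return {"instances": [{"data": encoded}]}


def write_input_json(image_path: str, out_path: str = "input.json") -> None:
    """Read an image from disk and write the request body to out_path."""
    payload = build_payload(Path(image_path).read_bytes())
    Path(out_path).write_text(json.dumps(payload))
```

For example, `write_input_json("000000039769.jpg")` would produce an input.json in the current directory, assuming the image was saved under that (hypothetical) local file name.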

input.json

{

"instances": [

{

"data": "BASE64 IMAGE"

}

]

}import requests

import json

url = "http://abd5bc101d01a4478baa58570709a6f6-1419869784.ap-south-1.elb.amazonaws.com/v1/models/imagenet-vit:predict"

with open("input.json") as f:

payload = json.load(f)

headers = {

'Host': 'imagenet-vit.default.emlo.tsai',

'Content-Type': 'application/json'

}

response = requests.request("POST", url, headers=headers, json=payload)

print(response.text){

"predictions": [

{

"class": "Egyptian cat",

"probability": 0.9374412894248962

}

]

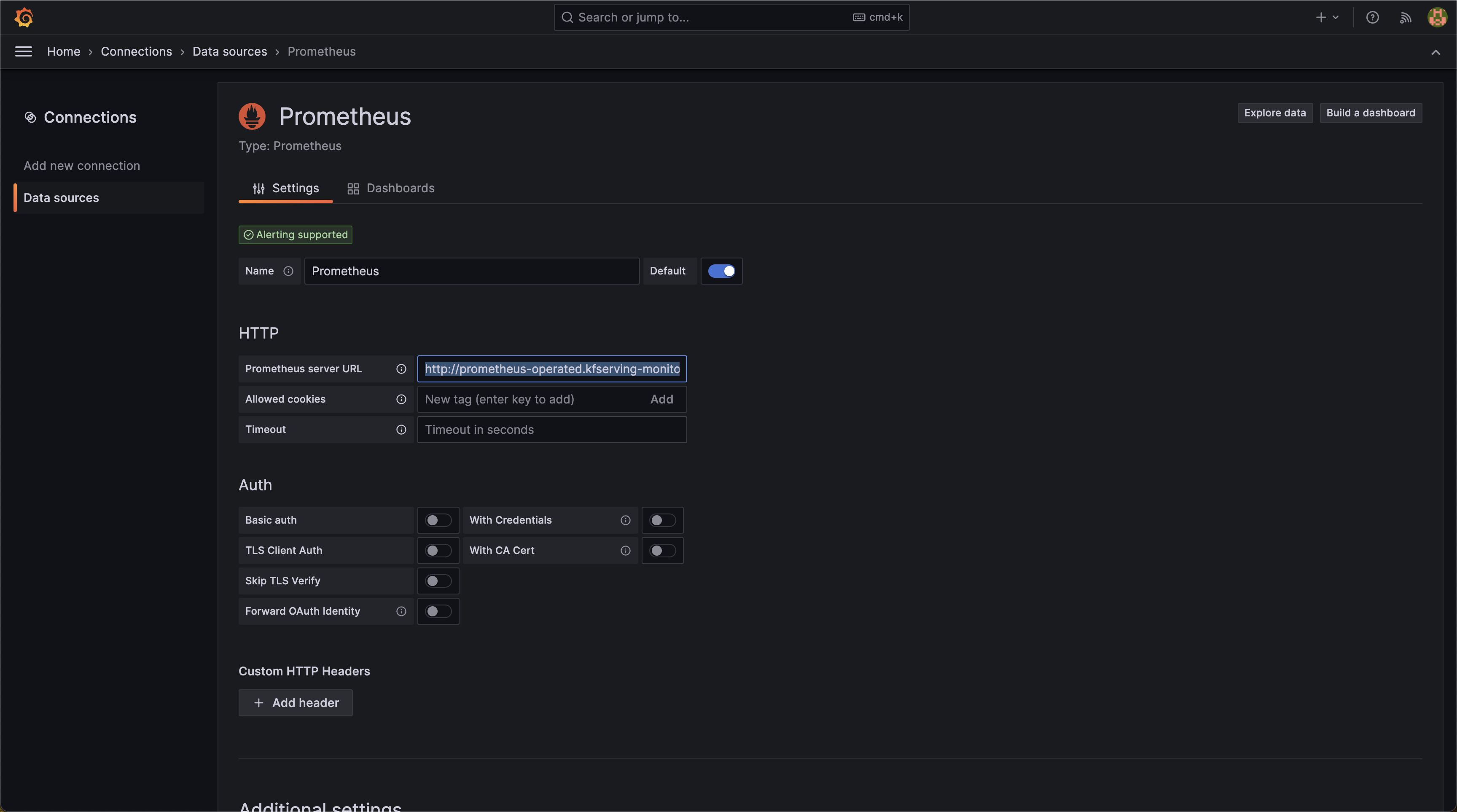

}Prometheus & Grafana

Install Prometheus

https://github.com/kserve/kserve/blob/master/docs/samples/metrics-and-monitoring/README.md

Install Kustomize on your system

https://kubectl.docs.kubernetes.io/installation/kustomize/

git clone https://github.com/kserve/kserve

cd kserve

kustomize build docs/samples/metrics-and-monitoring/prometheus-operator | kubectl apply -f -

kubectl wait --for condition=established --timeout=120s crd/prometheuses.monitoring.coreos.com

kubectl wait --for condition=established --timeout=120s crd/servicemonitors.monitoring.coreos.com

kustomize build docs/samples/metrics-and-monitoring/prometheus | kubectl apply -f -

Test if Prometheus is working

kubectl port-forward service/prometheus-operated -n kfserving-monitoring 9090:9090

We need to patch KServe’s queue-proxy so that Prometheus can collect the metrics of every container in the pod

If an InferenceService uses Knative, then it has at least two containers in one pod, queue-proxy and kserve-container. A limitation of using Prometheus is that it supports scraping only one endpoint in the pod. When there are multiple containers in a pod that emit Prometheus metrics, this becomes an issue (see Prometheus for multiple port annotations issue #3756 for the full discussion on this topic). In an attempt to make an easy-to-use solution, the queue-proxy is extended to handle this use case.

https://github.com/kserve/kserve/blob/master/qpext/README.md

qpext_image_patch.yaml

data:
  queue-sidecar-image: kserve/qpext:latest

kubectl patch configmaps -n knative-serving config-deployment --patch-file qpext_image_patch.yaml

NOTE: You will need to delete your deployment and redeploy to use the new qpext image we just patched

Install Grafana

helm install grafana grafana/grafana

NOTE: It would be a good idea to install it in a separate namespace

Fetch the admin user’s password from secrets

kubectl get secret --namespace default grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

Port forward Grafana

kubectl port-forward svc/grafana 3000:80

Add a New Data Source in Grafana

Add this as the server url

http://prometheus-operated.kfserving-monitoring.svc.cluster.local:9090

Add this Dashboard

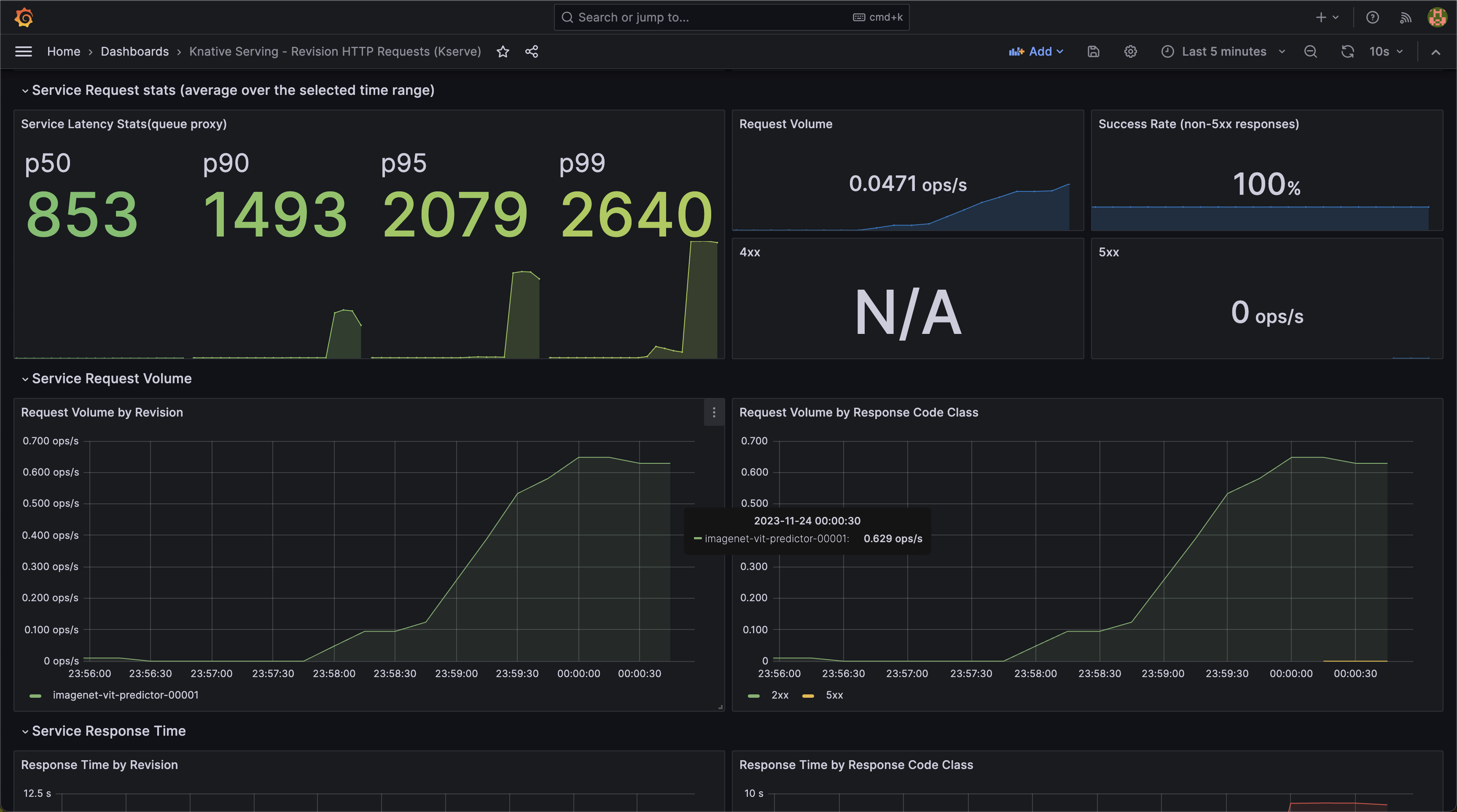

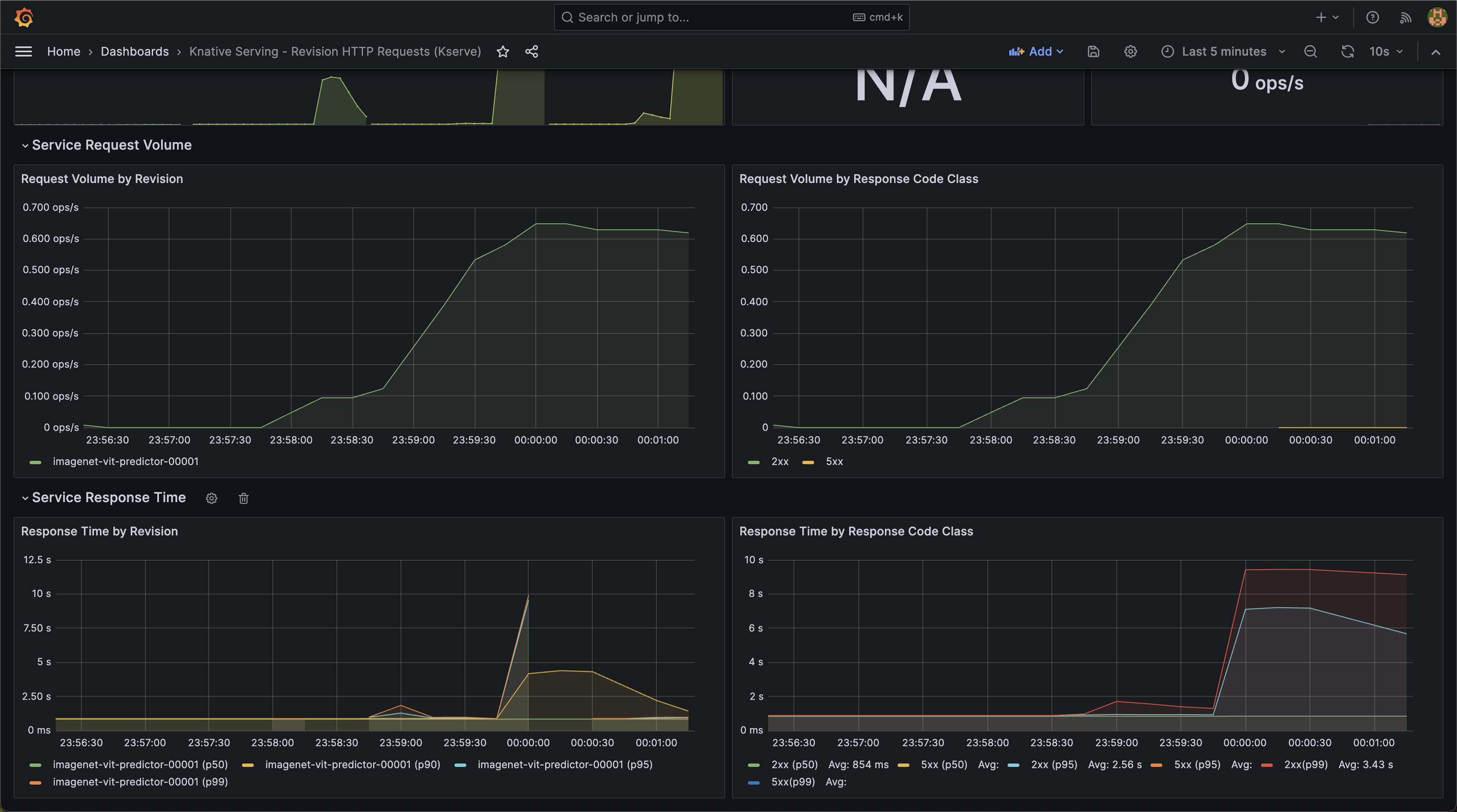

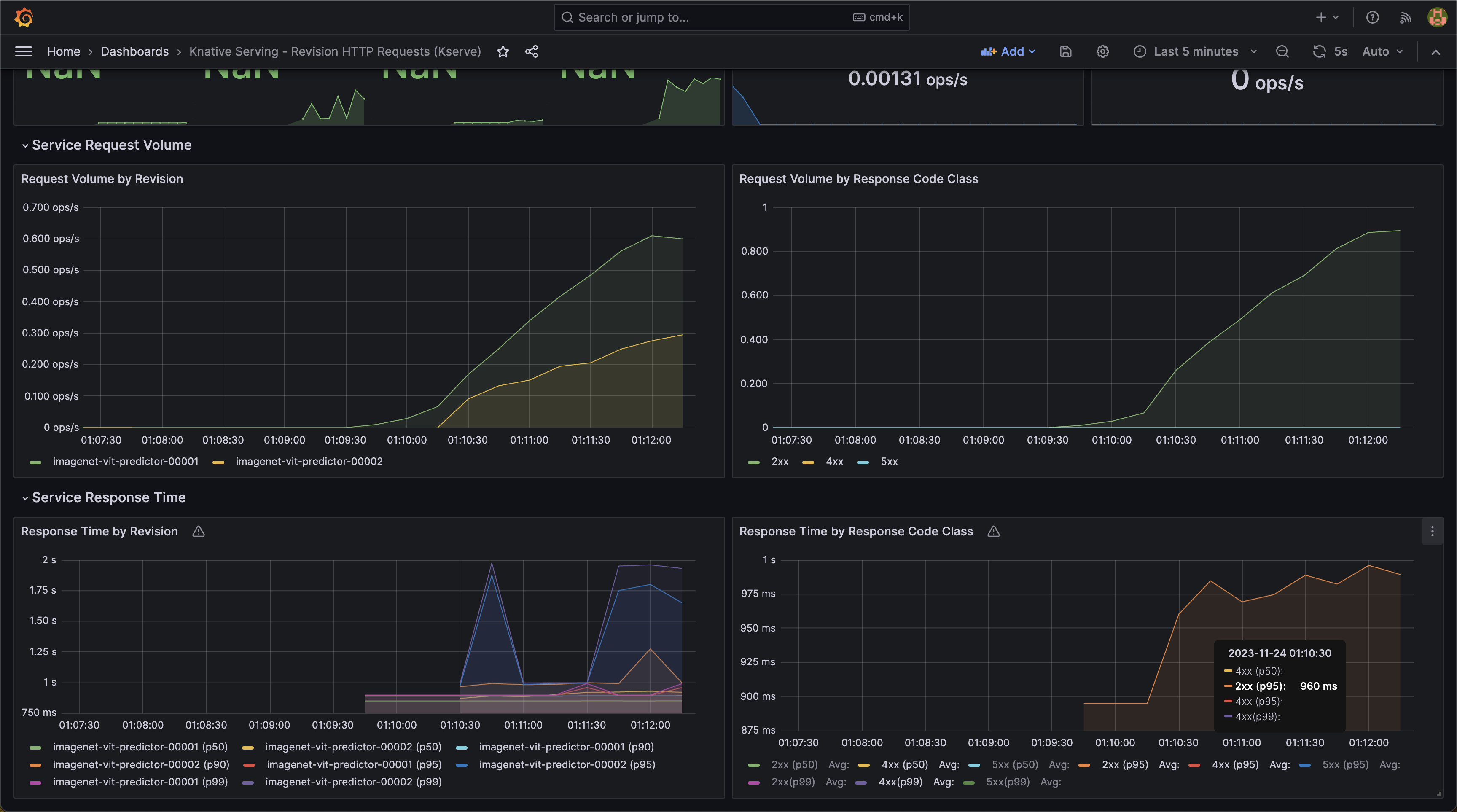

https://grafana.com/grafana/dashboards/18032-knative-serving-revision-http-requests/

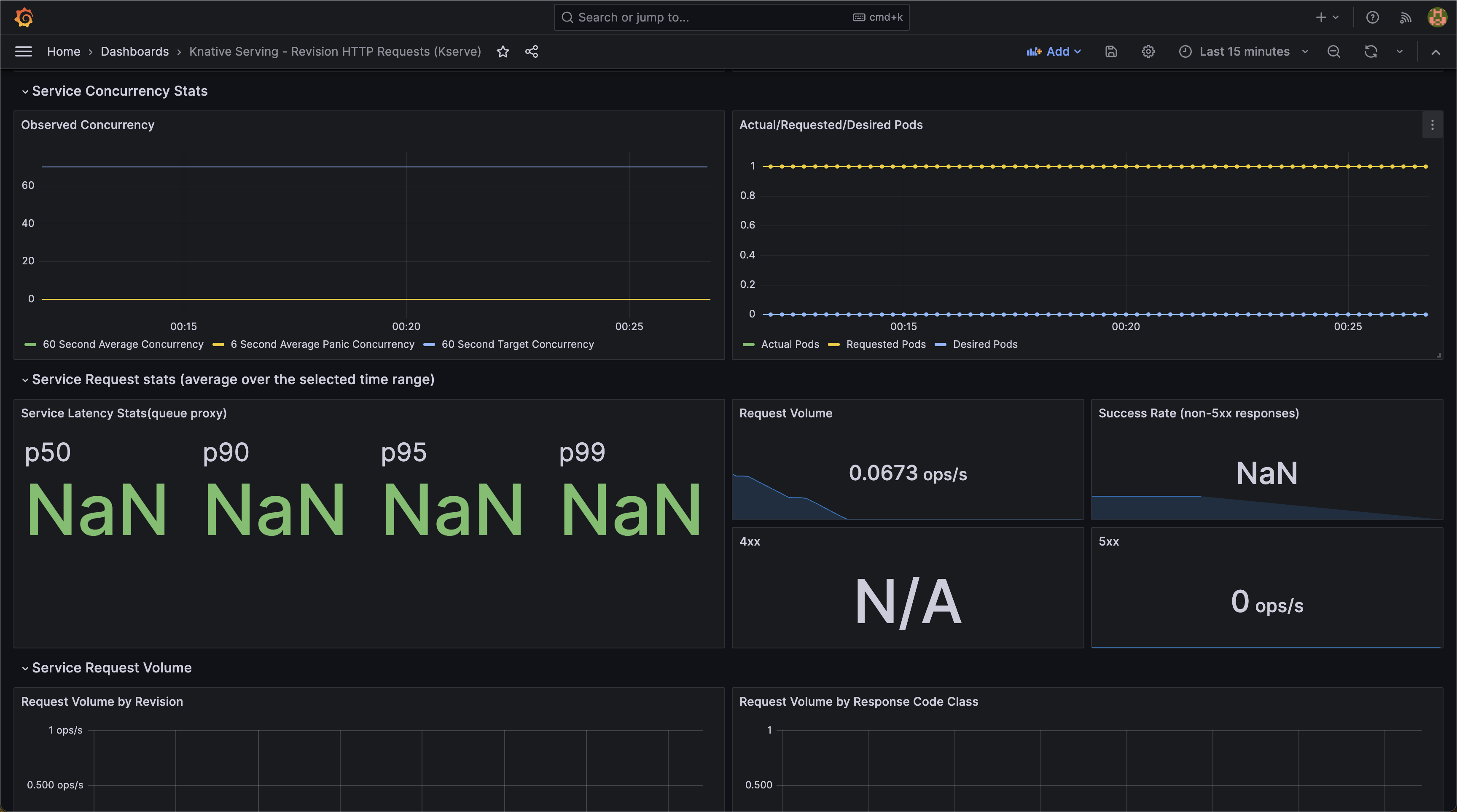

Now add some load to the model

send.py

import json

import requests

# Load balancer address of the Istio ingress gateway
url = "http://abd5bc101d01a4478baa58570709a6f6-1419869784.ap-south-1.elb.amazonaws.com/v1/models/imagenet-vit:predict"

# Request body with the base64-encoded image
with open("input.json") as f:
    payload = json.load(f)

# The Host header routes the request to the InferenceService
headers = {
    'Host': 'imagenet-vit.default.emlo.tsai',
    'Content-Type': 'application/json'
}

response = requests.request("POST", url, headers=headers, json=payload)

# Print headers, status code and body so failures are easy to spot in a loop
print(response.headers)
print(response.status_code)
print(response.json())

You should start seeing some stats about the requests

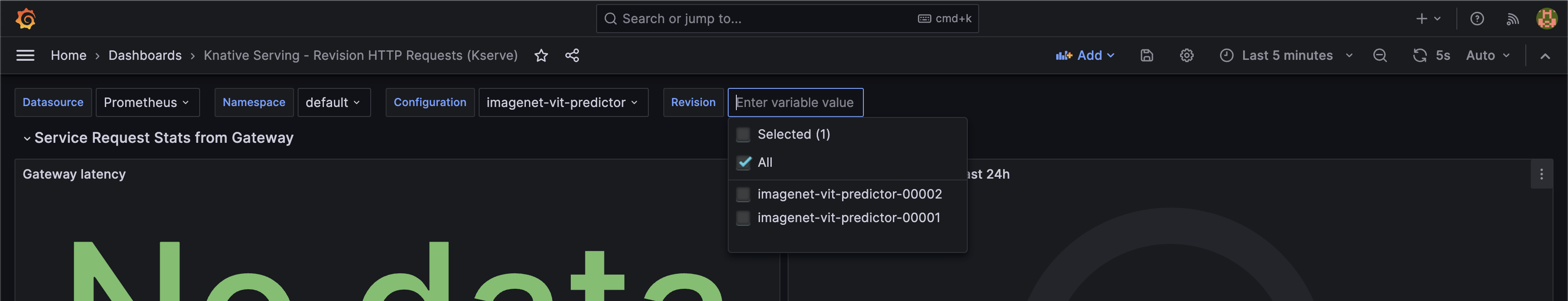

Canary Deployment

Now we are ready for a Canary Deployment and Observability with Prometheus & Grafana

vit-classifier.yaml

apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "imagenet-vit"
  annotations:
    serving.kserve.io/enable-metric-aggregation: "true"
    serving.kserve.io/enable-prometheus-scraping: "true"
spec:
  predictor:
    canaryTrafficPercent: 30
    serviceAccountName: s3-read-only
    model:
      modelFormat:
        name: pytorch
      # storageUri: s3://tsai-emlo/kserve-ig-2/imagenet-vit/
      storageUri: s3://tsai-emlo/kserve-ig-2/cat-classifier/
      resources:
        limits:
          cpu: 2600m
          memory: 4Gi

❯ kg isvc

NAME           URL                                     READY   PREV   LATEST   PREVROLLEDOUTREVISION          LATESTREADYREVISION            AGE
imagenet-vit   http://imagenet-vit.default.emlo.tsai   True    70     30       imagenet-vit-predictor-00001   imagenet-vit-predictor-00002   73m

Start sending requests

for i in {1..200}; do python send.py ; done

Lots of 4xx errors!

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '56', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:12:35 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '1'}

404

{'error': 'Model with name imagenet-vit does not exist.'}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:12:35 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '750'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:12:36 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '764'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:12:37 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '751'}

200

This is because the .mar file for cat-classifier still advertises the model name as cat-classifier
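A mismatch like this can be caught before rollout by comparing the model name in the request URL with the names registered in config.properties. The sketch below assumes that the registered names are the keys under `models` in the `model_snapshot` entry, as in the config.properties shown earlier; the helper names and the example host are made up for illustration.

```python
import json


def advertised_model_names(properties_text: str) -> list:
    """Return the model names listed in the model_snapshot entry, if any."""
    for line in properties_text.splitlines():
        key, sep, value = line.partition("=")
        if sep and key.strip() == "model_snapshot":
            snapshot = json.loads(value)
            return sorted(snapshot.get("models", {}))
    return []


def model_name_from_url(url: str) -> str:
    """Extract <name> from a URL ending in /v1/models/<name>:predict."""
    return url.rsplit("/", 1)[-1].split(":", 1)[0]


# Shortened copy of the model_snapshot line from config.properties above
properties = (
    'load_models=all\n'
    'model_snapshot={"name":"startup.cfg","modelCount":1,"models":'
    '{"vit-classifier":{"1.0":{"defaultVersion":true,"marName":"cat-classifier.mar"}}}}\n'
)

requested = model_name_from_url("http://example.invalid/v1/models/imagenet-vit:predict")
registered = advertised_model_names(properties)
if requested not in registered:
    print(f"'{requested}' is not registered; config.properties lists {registered}")
```

Running a check like this against the canary's config.properties before applying the manifest would flag the name that the 404 responses complain about.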

Time to Rollback!

apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "imagenet-vit"
  annotations:
    serving.kserve.io/enable-metric-aggregation: "true"
    serving.kserve.io/enable-prometheus-scraping: "true"
spec:
  predictor:
    canaryTrafficPercent: 0
    serviceAccountName: s3-read-only
    model:
      modelFormat:
        name: pytorch
      # storageUri: s3://tsai-emlo/kserve-ig-2/imagenet-vit/
      storageUri: s3://tsai-emlo/kserve-ig-2/cat-classifier/
      resources:
        limits:
          cpu: 2600m
          memory: 4Gi

❯ kg isvc

NAME           URL                                     READY   PREV   LATEST   PREVROLLEDOUTREVISION          LATESTREADYREVISION            AGE
imagenet-vit   http://imagenet-vit.default.emlo.tsai   True    100    0        imagenet-vit-predictor-00001   imagenet-vit-predictor-00002   89m

Now the PREV revision goes back to 100% of the traffic and the new revision drops to 0%

All of our requests are now going through

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:18:43 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '751'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:18:44 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '754'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:18:45 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '765'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:18:46 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '754'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:18:47 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '766'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:18:48 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '743'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:18:49 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '781'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:18:50 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '744'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:18:51 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '768'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:18:52 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '755'}

200

We can fix this by recreating the cat-classifier .mar file with imagenet-vit as the model name

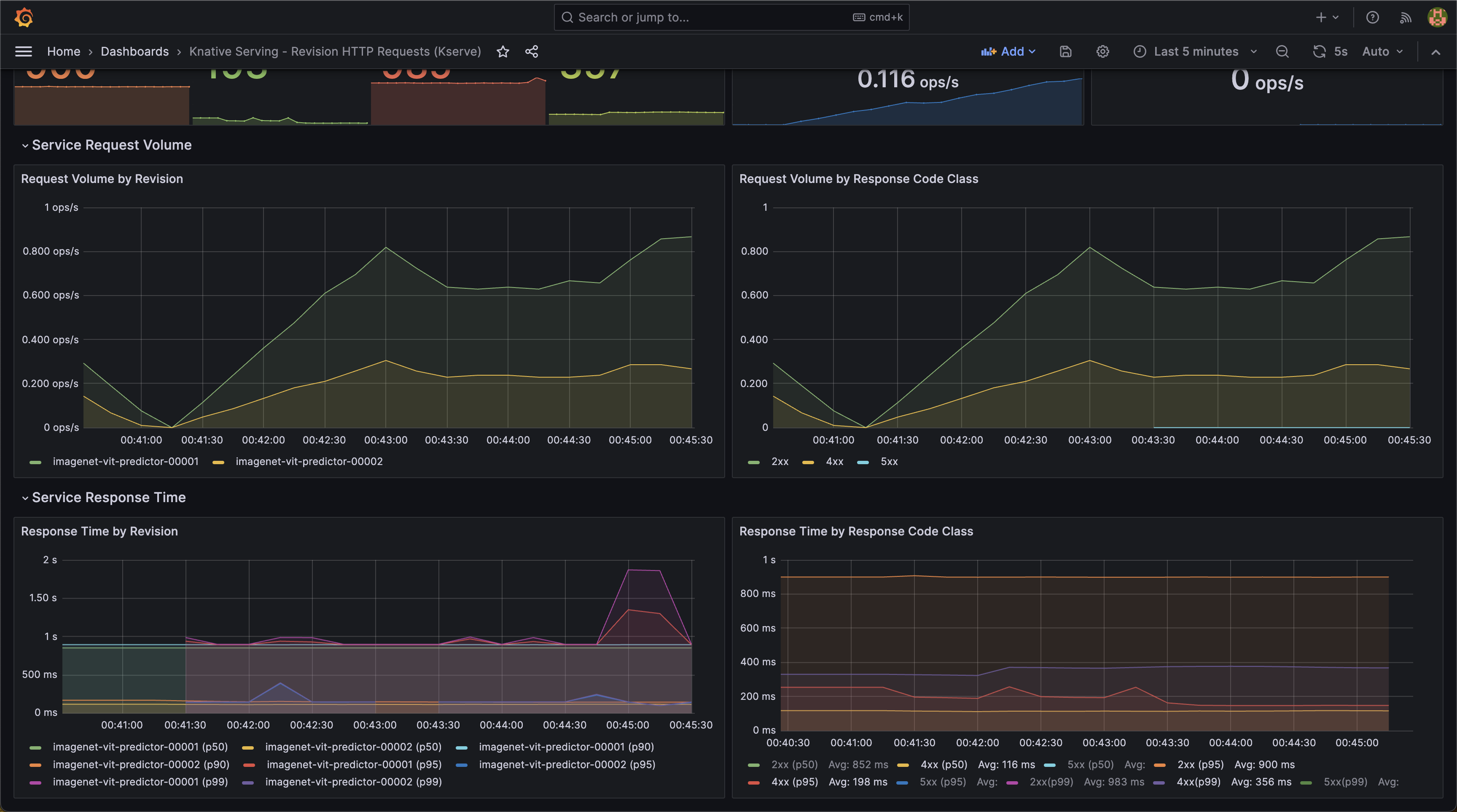

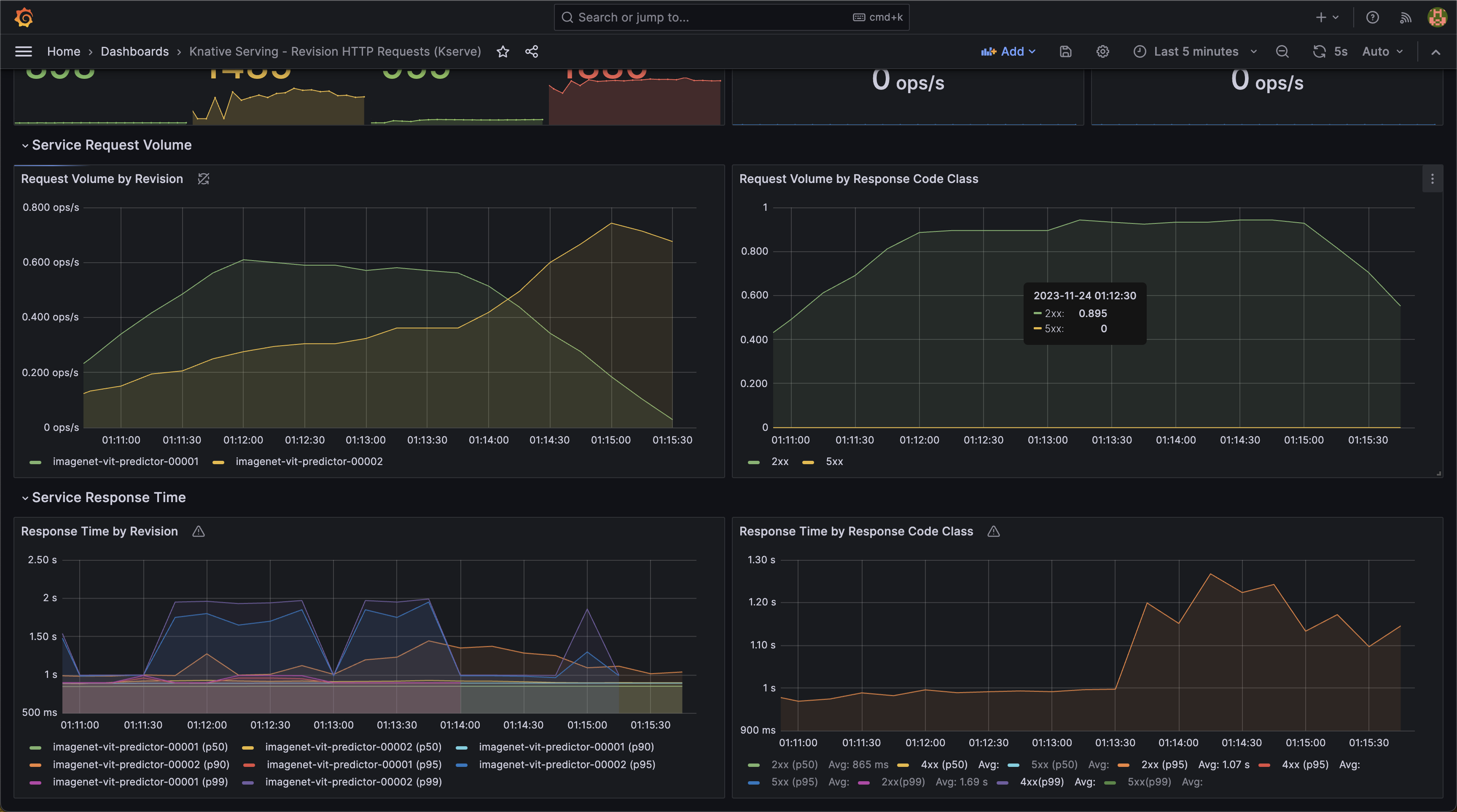

After the fix, some of the responses are Bengal cat!

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:40:51 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '745'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:40:52 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '744'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:40:53 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '761'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:40:55 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '848'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:40:55 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '755'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:40:57 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '792'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:40:58 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '837'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:40:59 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '816'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:41:00 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '743'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:41:01 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '772'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:41:02 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '782'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:41:03 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '860'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:41:04 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '746'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:41:05 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '778'}

200

{'predictions': [{'class': 'Egyptian cat', 'probability': 0.9374412894248962}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:41:06 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '899'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '75', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:41:07 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '742'}

200

No More Errors! 🪄
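To check whether the share of canary responses is close to the configured canaryTrafficPercent, the predicted classes can be tallied. This is a minimal sketch that assumes the response bodies printed by send.py have been collected into a list; `class_shares` is a made-up helper, and the sample list below is illustrative input, not measured data.

```python
from collections import Counter


def class_shares(responses: list) -> dict:
    """Percentage of predictions per class across a list of response bodies."""
    counts = Counter()
    for body in responses:
        for prediction in body.get("predictions", []):
            counts[prediction["class"]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {name: 100.0 * n / total for name, n in counts.items()}


# Illustrative input: 7 responses from the old revision, 3 from the canary
sample = (
    [{"predictions": [{"class": "Egyptian cat"}]}] * 7
    + [{"predictions": [{"class": "Bengal"}]}] * 3
)
print(class_shares(sample))
```

With only a few hundred requests the observed share will fluctuate around the configured percentage rather than match it exactly.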

We can promote our new model by just removing canaryTrafficPercent

apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "imagenet-vit"
  annotations:
    serving.kserve.io/enable-metric-aggregation: "true"
    serving.kserve.io/enable-prometheus-scraping: "true"
spec:
  predictor:
    serviceAccountName: s3-read-only
    model:
      modelFormat:
        name: pytorch
      # storageUri: s3://tsai-emlo/kserve-ig-2/imagenet-vit/
      storageUri: s3://tsai-emlo/kserve-ig-2/cat-classifier/
      resources:
        limits:
          cpu: 2600m
          memory: 4Gi

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:27 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '779'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:28 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '795'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:29 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '767'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:30 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '778'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:32 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '789'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:33 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '798'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:34 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '781'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:35 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '767'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:36 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '922'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:37 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '775'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:38 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '774'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:39 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '787'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69', 'content-type': 'application/json', 'date': 'Thu, 23 Nov 2023 19:44:40 GMT', 'server': 'istio-envoy', 'x-envoy-upstream-service-time': '825'}

200

{'predictions': [{'class': 'Bengal', 'probability': 0.5737159252166748}]}

{'content-length': '69'

Look at the Request Volume by Revision: the canary model is now promoted and the older version of the model is getting no requests
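The canary, rollback and promotion manifests used in this walkthrough differ only in the canaryTrafficPercent field. As a rough sketch, they could be generated from one function; `inference_service` is a made-up helper, and the field values are copied from the YAML files above.

```python
import json
from typing import Optional


def inference_service(storage_uri: str, canary_traffic_percent: Optional[int] = None) -> dict:
    """Build the imagenet-vit InferenceService manifest as a plain dict.

    canary_traffic_percent=None omits the field, which promotes the latest revision.
    """
    predictor = {
        "serviceAccountName": "s3-read-only",
        "model": {
            "modelFormat": {"name": "pytorch"},
            "storageUri": storage_uri,
            "resources": {"limits": {"cpu": "2600m", "memory": "4Gi"}},
        },
    }
    if canary_traffic_percent is not None:
        predictor["canaryTrafficPercent"] = canary_traffic_percent
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {
            "name": "imagenet-vit",
            "annotations": {
                "serving.kserve.io/enable-metric-aggregation": "true",
                "serving.kserve.io/enable-prometheus-scraping": "true",
            },
        },
        "spec": {"predictor": predictor},
    }


uri = "s3://tsai-emlo/kserve-ig-2/cat-classifier/"
canary = inference_service(uri, canary_traffic_percent=30)
rollback = inference_service(uri, canary_traffic_percent=0)
promote = inference_service(uri)
print(json.dumps(promote, indent=2))
```

Since kubectl accepts JSON manifests, the printed output could be piped to `kubectl apply -f -`.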

NOTES: Route Traffic using Tags: https://kserve.github.io/website/0.11/modelserving/v1beta1/rollout/canary-example/#route-traffic-using-a-tag